Hello,

First of all, I have to thank you for the software and the effort you have put into its implementation and documentation. I will try to give a brief overview of the problem I am tackling and see whether you have had any previous experience that could be useful.

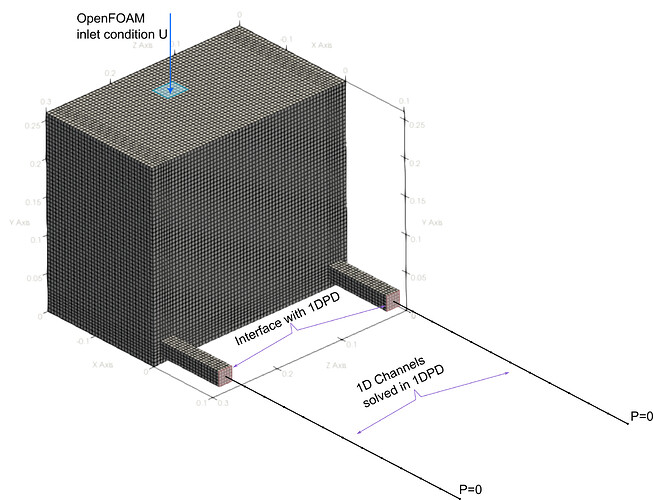

The physical problem we are trying to solve is the flow through a series of distribution manifolds that are connected by a (high) number of (relatively) 1D channels. The brute-force approach is to include all of these channels in the 3D fully turbulent CFD analysis. However, most of the computational cost is then invested in a rather boring turbulent flow in 1D pipes. At the other extreme, I could use a simple 1D approach for the distribution manifolds, but I would then lose many important flow features in the manifolds that certainly cannot be modelled as 1D flow. Some time ago, I wrote THIS post with a more detailed description of my problem, in case you are interested. As you may see, the post received an overwhelming amount of support from the OpenFOAM community.

Ideally, I would like to use OpenFOAM to solve the flow in the manifolds (3D CFD) and an in-house code that we have developed over the last few years to solve the flow in the pipes (1DPD). As you may have already realized, I think that preCICE (FF module) is exactly what I am looking for.

We spent a few days installing things and modifying our in-house code so that it can communicate with preCICE. The code is written in Python, so we use the Python bindings. After some changes here and there, both programs are now talking to each other nicely and everything seems fine.

Our final problem will involve several manifolds in series, connected by different channels. Therefore, some of the interfaces will be manifold inlets and others manifold outlets. For a first try, however, let's keep it simple. We are modelling the flow in a square box (roughly the size of a shoe box) with one inlet at the top, where we impose an inlet velocity, and two outlets on one of the lateral walls. The two outlets are connected to two straight pipes that are modelled with our in-house code 1DPD. The interface takes the flow rate from each of the two OpenFOAM outlets and passes it to 1DPD. Then 1DPD solves the steady-state 1D approximation (simple turbulent pipe flow), which provides the pressure drop. This pressure is then passed back from 1DPD to each of the OpenFOAM outlets.
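To make the 1D side of the exchange concrete, here is the kind of flow-rate-to-pressure-drop relation such a pipe model evaluates. This is not 1DPD: it is a minimal, hypothetical stand-in assuming a smooth straight pipe, the Darcy-Weisbach equation with the Blasius friction factor, and water-like properties (the `rho` and `mu` defaults and the function names are our own assumptions for illustration).

```python
import math


def friction_factor(reynolds: float) -> float:
    """Darcy friction factor for a smooth pipe (illustrative correlations only)."""
    if reynolds < 2300.0:
        return 64.0 / reynolds          # laminar (Hagen-Poiseuille)
    return 0.316 * reynolds ** -0.25    # Blasius, smooth turbulent pipe, Re up to ~1e5


def pipe_pressure_drop(flow_rate: float, diameter: float, length: float,
                       rho: float = 1000.0, mu: float = 1.0e-3) -> float:
    """Hypothetical stand-in for a 1D pipe model: pressure drop [Pa] for a flow rate [m^3/s].

    The sign follows the flow direction, so backflow (negative flow rate)
    gives a negative pressure drop.
    """
    if flow_rate == 0.0:
        return 0.0
    area = math.pi * diameter ** 2 / 4.0
    velocity = abs(flow_rate) / area           # mean velocity in the pipe
    reynolds = rho * velocity * diameter / mu
    f = friction_factor(reynolds)
    dp = f * (length / diameter) * 0.5 * rho * velocity ** 2
    return math.copysign(dp, flow_rate)


# Example: 1 L/s through a 1 m long pipe with a 50 mm diameter
dp = pipe_pressure_drop(1.0e-3, diameter=0.05, length=1.0)
print(f"pressure drop: {dp:.1f} Pa")
print(f"kinematic pressure drop for simpleFoam: {dp / 1000.0:.4f} m^2/s^2")
```

Note that simpleFoam works with kinematic pressure (p/rho, in m²/s²), so a pressure drop computed in Pa has to be divided by the density before it is imposed as an OpenFOAM boundary value.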

So far, we have tried a serial-explicit coupling in which we give the simpleFoam solver (which looks for a steady-state solution) enough iterations between two successive preCICE-1DPD exchanges to reach a reasonable solution. After a considerable amount of trial and error, the solution has only worked when we limit the pressure change imposed by 1DPD on OpenFOAM to very small steps (by means of a relaxation factor). We believe that part of the problem may be that the pressure mapping (1DPD->OpenFOAM) is imposed at the faceCenters instead of at the faceNodes (which causes backflow in some cases). However, we are not entirely sure that the problem comes from this source.
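The relaxation we apply amounts to sending p_old + ω (p_1DPD − p_old) instead of the raw 1DPD pressure. The sketch below (plain Python, hypothetical names, not our actual 1DPD code) shows this update and, under the simplifying assumption that the 1D answer stays fixed between exchanges, how many exchanges a given ω needs: each exchange removes only a fraction ω of the remaining gap, so with ω = 0.01 several hundred exchanges are needed to close 99.9 % of it.

```python
import math


def relax_pressure(p_old, p_computed, omega):
    """Constant under-relaxation of the pressures sent to OpenFOAM.

    p_old:      pressures sent at the previous exchange (one per interface vertex)
    p_computed: pressures just computed by the 1D model
    omega:      relaxation factor in (0, 1]
    """
    return [po + omega * (pc - po) for po, pc in zip(p_old, p_computed)]


def exchanges_needed(omega, tol=1.0e-3):
    """Exchanges until the relative gap to a FIXED target drops to tol or below.

    Simplifying assumption: the 1D result does not change between exchanges,
    so each relaxed update shrinks the remaining gap by a factor (1 - omega).
    """
    gap, n = 1.0, 0
    while gap > tol:
        gap *= 1.0 - omega
        n += 1
    return n


print(relax_pressure([0.0, 0.0], [100.0, 80.0], omega=0.01))
for omega in (0.5, 0.1, 0.01):
    # closed form for comparison: ceil(ln(tol) / ln(1 - omega))
    estimate = math.ceil(math.log(1.0e-3) / math.log(1.0 - omega))
    print(omega, exchanges_needed(omega), estimate)
```

In the real coupled problem the OpenFOAM response changes between exchanges, so this only gives a feeling for why a factor as small as 0.01 makes convergence slow.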

To try a different approach, we are now using serial-implicit coupling through preCICE. However, we get the following errors:

```
---[precice] ERROR: The required actions write-iteration-checkpoint are not fulfilled. Did you forget to call "markActionFulfilled"?
---[precice] ERROR: Sending data to another participant (using sockets) failed with a system error: write: Broken pipe. This often means that the other participant exited with an error (look there).
```

However, our code does define `cowic = precice.action_write_iteration_checkpoint()` and marks this action as fulfilled when it is required.

For reference, here is the Python code in which we call both our in-house code 1DPD and the preCICE interface:

```python
import DPD  # in-house program 1DPD to solve steady-state flow in 1D channels
import precice

#% ---- Init PRECICE -------------------------------------------------------#
configuration_file_name = '../precice-config.xml'
participant_name = 'fluid_1d'
solver_process_index = 0
solver_process_size = 1

pp = precice.Interface(participant_name, configuration_file_name,
                       solver_process_index, solver_process_size)
mesh_id = pp.get_mesh_id('1d_mesh')

coric = precice.action_read_iteration_checkpoint()
cowic = precice.action_write_iteration_checkpoint()

#% ---- Init 1DPD ----------------------------------------------------------#
interface_mesh = DPD.initialize()

#% ---- Set interface mesh into precice ------------------------------------#
vertex_ids = pp.set_mesh_vertices(mesh_id, interface_mesh)
id_p = pp.get_data_id('Pressure', mesh_id)
id_u = pp.get_data_id('Velocity', mesh_id)

#% ---- Time loop ----------------------------------------------------------#
relaxation_factor = 0.01
t = 0.0
dt_max = pp.initialize()

while pp.is_coupling_ongoing():

    # READ DATA FROM OPENFOAM
    if pp.is_read_data_available():
        u_readed = pp.read_block_vector_data(id_u, vertex_ids)

    if pp.is_action_required(cowic):
        # no action is required in 1DPD in order to saveOldState,
        # because the solver is steady-state and independent of initial conditions
        pp.mark_action_fulfilled(cowic)

    # SOLVE 1DPD
    DPD.updateBC(u_readed)
    p_to_send = DPD.solve(relaxation_factor)

    if pp.is_action_required(cowic):
        pp.mark_action_fulfilled(cowic)

    # SEND DATA BACK TO precice->OPENFOAM
    pp.write_block_scalar_data(id_p, vertex_ids, p_to_send)

    # ADVANCE TIME
    dt_max = pp.advance(dt_max)
    t += dt_max

    if pp.is_action_required(coric):
        # no action in order to reloadOldState
        pp.mark_action_fulfilled(coric)

pp.finalize()
```

And I attach the config file:

`precice-config.xml`

So, our questions are:

- Do you think pressure mapping in the FF module could be made available at the faceNodes? And do you think this is the source of our problems?
- Do you see any overall problem in our approach, or do you think that our problem is better suited to another strategy?
- Do you think our code for serial-implicit coupling has any problem that prevents it from running correctly?
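One reason we are interested in serial-implicit coupling is that, as far as we understand, preCICE can then adapt the relaxation automatically instead of us hand-tuning a constant factor (its implicit schemes support acceleration methods such as Aitken under-relaxation or quasi-Newton variants, configured in `precice-config.xml`). The toy below illustrates Aitken's idea on a scalar fixed-point problem x = g(x). The linear map `round_trip` is a made-up stand-in for one OpenFOAM + 1DPD exchange (a higher outlet pressure gives a lower flow rate and hence a lower 1D pressure drop), and the code is only a sketch of the technique, not how preCICE implements it internally.

```python
def relaxed_fixed_point(g, x0, omega0, aitken=True, tol=1.0e-10, max_iter=10_000):
    """Solve x = g(x) by relaxed fixed-point iteration.

    With aitken=True the relaxation factor is updated every iteration with
    Aitken's formula; otherwise omega0 is kept constant.
    Returns (solution, number_of_residual_evaluations).
    """
    x, omega, r_prev = x0, omega0, None
    for k in range(1, max_iter + 1):
        r = g(x) - x                      # fixed-point residual
        if abs(r) < tol:
            return x, k
        if aitken and r_prev is not None and r != r_prev:
            dr = r - r_prev
            omega = -omega * r_prev * dr / (dr * dr)   # scalar Aitken update
        x += omega * r
        r_prev = r
    return x, max_iter


def round_trip(p):
    """Made-up linear stand-in for one OpenFOAM + 1D exchange (fixed point p = 20)."""
    return 100.0 - 4.0 * p


print(relaxed_fixed_point(round_trip, 0.0, omega0=0.01, aitken=False))
print(relaxed_fixed_point(round_trip, 0.0, omega0=0.1, aitken=True))
```

Without relaxation (omega0=1, aitken=False) this toy diverges because |g'(p)| = 4 > 1, which mirrors why we needed such a small constant factor. The constant ω = 0.01 converges but needs over 500 iterations, while the Aitken variant reaches the fixed point within a few iterations, since for a scalar linear map a single Aitken update already gives the exact factor.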

I hope I have summarized the problem clearly enough. If you have any other questions or need more details on the coupling code, please let me know.

Best regards

Eduardo

Edit: formatting issues